Explaining what an integer is might sound like something you could breeze through—but honestly, definitions can get a bit dry if you just state them flatly. Let’s keep it slightly messy, conversational, maybe even occasionally off-beat, because that’s how real thinking often flows. At its core, an integer is a whole number—positive, negative, or zero—but there’s a surprising amount of nuance when you dig into how we use or understand them.

Most of us encounter integers early—counting apples, checking scores, or scrawling them down in school. Yet, these seemingly simple numbers are foundational across fields: computing, finance, logic, even quantum mechanics if you go deep. You might say integers are like the unassuming backbone of everything that involves discrete counting. That sets the scene: now let’s untangle what makes an integer more than “just” a number.

What Are Integers? Definition and Context

Defining the Basics Clearly

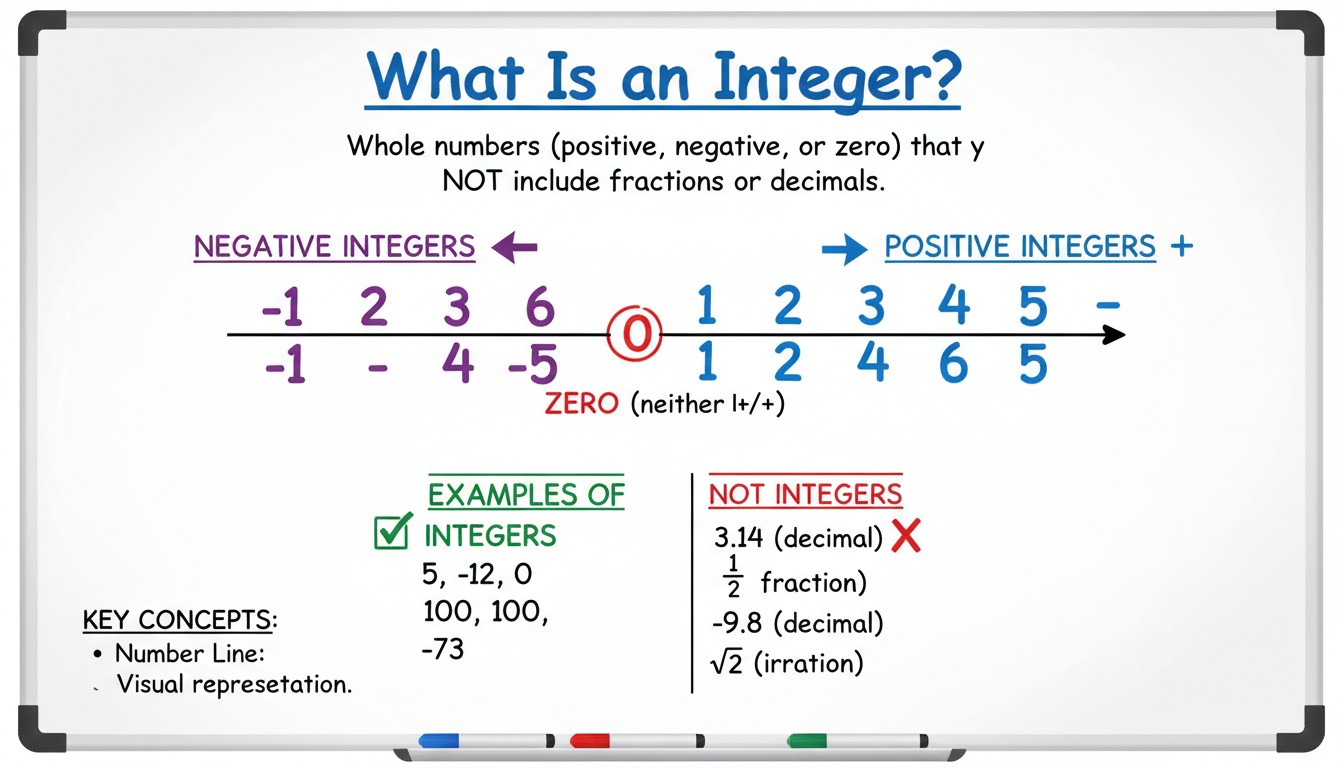

An integer is any whole number without fractional or decimal parts. This includes numbers like −3, 0, and 42. Unlike non-integer rationals such as 1/2 or 0.75, integers never slide into the realm of fractions or decimals. Strictly speaking, every integer is also a rational number (n = n/1); integers are just the rationals with nothing after the decimal point. They're the simple, countable building blocks in the broader number universe.

Subsets and Related Number Types

To make sense of integers in context, consider these related sets:

- Natural numbers (1, 2, 3…): Often used for counting, but definitions vary on whether zero belongs here.

- Whole numbers (0, 1, 2…): The natural numbers with zero included, a convention common in early education.

- Integers (…, –2, –1, 0, 1, 2…): The whole numbers plus their negatives, covering all whole-number territory.

So integers are just one slice of the big number pie, but they’re the slice we frequently rely on for discrete, exact values.

Why Integers Matter: Real-World Examples and Impacts

It helps to check out how integers pop up in real scenarios—because that’s way more compelling than static theory.

Everyday Interactions

Think of everyday life: your bank balance, scores in a game, population counts, or temperature readings (when rounded). Integers keep track of quantities that come in whole units, and even money is often handled as a whole number of cents. In programming, integers are a core data type: arithmetic on them is exact within the type's range, and it is typically cheap for the processor. Misusing one type for the other can introduce errors, like rounding bugs with floating-point numbers or overflow glitches with fixed-width integers, that programmers dread.
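A minimal Python sketch of both failure modes mentioned above. Python's own `int` is arbitrary-precision and never overflows, so the 32-bit wraparound is simulated by a hypothetical helper, `wrap_int32`. Java's `int` wraps around like this; in C, signed overflow is undefined behavior, which is arguably worse.

```python
# Floating point: adding ten cents three times accumulates binary rounding error.
float_total = 0.1 + 0.1 + 0.1
print(float_total == 0.3)  # False: the float sum is 0.30000000000000004

# The same amounts tracked as whole cents stay exact.
cents_total = 10 + 10 + 10
print(cents_total == 30)  # True


def wrap_int32(value: int) -> int:
    """Hypothetical helper: simulate two's-complement 32-bit wraparound."""
    value &= 0xFFFFFFFF  # keep only the low 32 bits
    # Bit patterns at or above 2**31 represent negative numbers.
    return value - 0x1_0000_0000 if value >= 0x8000_0000 else value


# The largest 32-bit signed value plus one wraps to the most negative value.
print(wrap_int32(2_147_483_647 + 1))  # -2147483648
```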

Applied Fields and Specialized Use

In cryptography, prime integers underpin encryption schemes that secure online communication. In graph theory, weighted edges (which can be whole-number costs such as distances in meters) determine shortest paths, as in GPS navigation. So even if most of us don't see integers in our daily routine, they quietly keep algorithms in finance, logistics, and artificial intelligence running smoothly.
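To illustrate the shortest-path point, here is a minimal sketch of Dijkstra's algorithm in Python, assuming non-negative integer edge weights. The `roads` graph and its meter distances are made up for illustration, and real navigation systems use more elaborate variants; this only shows the core idea. Because the weights are integers, path lengths are summed exactly.

```python
import heapq


def shortest_distances(graph: dict[str, list[tuple[str, int]]], source: str) -> dict[str, int]:
    """Dijkstra's algorithm over non-negative integer edge weights."""
    dist = {source: 0}
    heap = [(0, source)]  # (distance so far, node), smallest distance first
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist[node]:
            continue  # stale queue entry: a shorter path was already found
        for neighbor, weight in graph.get(node, []):
            candidate = d + weight
            if candidate < dist.get(neighbor, float("inf")):
                dist[neighbor] = candidate
                heapq.heappush(heap, (candidate, neighbor))
    return dist


# Hypothetical road network: edge weights are distances in whole meters.
roads = {
    "A": [("B", 400), ("C", 150)],
    "C": [("B", 200), ("D", 700)],
    "B": [("D", 300)],
}
print(shortest_distances(roads, "A"))  # {'A': 0, 'B': 350, 'C': 150, 'D': 650}
```

Note that the direct A to B road (400 m) loses to the detour through C (150 + 200 = 350 m).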

Let’s pause for a quick narrative aside: I once chatted with a software engineer who confessed, “You know, I used to take integers for granted until a misplaced negative sign in a loop nearly crashed our production server. That was a rude awakening.” Moments like that remind me—integers feel safe because they’re basic, but that’s exactly why small mistakes can cascade.

Diving Deeper: Properties and Operations of Integers

Fundamental Arithmetic Rules

Integer arithmetic obeys familiar laws: adding, subtracting, or multiplying two integers always yields another integer. Divide two integers? Well, that often lands you outside the integer set (unless the division comes out even). Key rules include:

- Closure under addition, subtraction, multiplication

- Commutativity (e.g., 3 + 5 = 5 + 3)

- Associativity ((2 + 3) + 4 = 2 + (3 + 4))

- Distributivity of multiplication over addition

These properties form the backbone of algebraic manipulation, programming logic, and even proofs in mathematics.
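The laws listed above can be spot-checked in Python over a small sample. This is a sanity check on an arbitrary finite window (here, −5 to 5), not a proof: the laws hold for all integers, but a loop can only test finitely many cases. The last line shows division leaving the integers.

```python
from itertools import product

SAMPLE = range(-5, 6)  # an arbitrary small window of integers to test


def integer_laws_hold(values) -> bool:
    """Spot-check the listed laws for every triple (a, b, c) drawn from values."""
    for a, b, c in product(values, repeat=3):
        closed = all(isinstance(x, int) for x in (a + b, a - b, a * b))
        commutative = a + b == b + a and a * b == b * a
        associative = (a + b) + c == a + (b + c) and (a * b) * c == a * (b * c)
        distributive = a * (b + c) == a * b + a * c
        if not (closed and commutative and associative and distributive):
            return False
    return True


print(integer_laws_hold(SAMPLE))    # True
print(7 / 2, type(7 / 2).__name__)  # 3.5 float: true division is not closed over integers
```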

Special Concepts: Absolute Value, Division, and Modulo

- Absolute value strips the sign—|−7| = 7. It measures “how far from zero,” useful in error estimations or distance calculations.

- Division with remainder: Unlike rational division, dividing integers gives a quotient plus remainder (e.g., 17 ÷ 5 = 3 remainder 2). This is fundamental in computer science for tasks like hashing, memory addressing, or looping back in circular arrays.

- Modulo operation (x mod n) reveals the remainder directly—a tool used in scheduling (e.g., day-of-week cycles), cryptography, etc.
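The three ideas in this list map directly onto Python built-ins: `abs`, `divmod` (quotient and remainder together), and the `%` operator. The day numbering (0 = Monday) and the helpers `days_after` and `ring_index` are illustrative assumptions, not standard functions.

```python
DAYS = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]  # assumed: index 0 = Monday


def days_after(start: str, offset: int) -> str:
    """Hypothetical helper: step through the week cyclically using mod 7."""
    return DAYS[(DAYS.index(start) + offset) % len(DAYS)]


def ring_index(i: int, size: int) -> int:
    """Hypothetical helper: map any integer position onto a circular array."""
    return i % size


print(abs(-7))                # 7: distance from zero
print(divmod(17, 5))          # (3, 2): 17 = 5 * 3 + 2
print(ring_index(12, 5))      # 2: position 12 wraps around a 5-slot ring
print(days_after("Fri", 10))  # Mon
```

In Python, `%` with a positive modulus never returns a negative result, so `ring_index(-1, 5)` is 4. C's `%` would give −1 there, a classic source of out-of-bounds bugs when porting circular-buffer code.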

Number Theory Elements

Integers open doors to deeper ideas: divisibility, primes, greatest common divisors, and more. Take cryptography again: RSA, a widely used public-key scheme, relies on the difficulty of factoring the product of two large primes. In short, integers aren't just tools; they're the seeds of entire mathematical branches.
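To make the number-theory link concrete, here is a toy Python sketch: Euclid's algorithm for the greatest common divisor, and textbook-sized RSA built on it. The primes 61 and 53 and the message 65 are illustrative choices; real RSA uses primes hundreds of digits long plus padding schemes, so this is nowhere near secure. The private exponent `d` is computed from `phi`, and computing `phi` requires knowing the factors of `n`, which is exactly what an attacker cannot easily find.

```python
import math


def gcd(a: int, b: int) -> int:
    """Euclid's algorithm: replace (a, b) with (b, a mod b) until b is 0."""
    while b:
        a, b = b, a % b
    return abs(a)


# Toy RSA with tiny textbook primes: insecure, for illustration only.
p, q = 61, 53
n = p * q                # 3233, the public modulus
phi = (p - 1) * (q - 1)  # 3120, computable only if you know p and q
e = 17                   # public exponent; must share no factor with phi
if gcd(e, phi) != 1:
    raise ValueError("e must be coprime to phi")
d = pow(e, -1, phi)      # private exponent: modular inverse of e (Python 3.8+)

message = 65
ciphertext = pow(message, e, n)   # encrypt: message**e mod n
recovered = pow(ciphertext, d, n)  # decrypt: ciphertext**d mod n

print(gcd(84, 36), math.gcd(84, 36))  # 12 12
print(recovered == message)           # True
```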

“The humble integer often goes overlooked, especially in everyday computing, but it’s really the silent hero behind secure communications and efficient algorithms.”

That quote (let's say it came from a mathematician I loosely remember from a conference) captures how integers underlie so much of modern technology, even if we don't always notice.

Thinking About Integers: Intuition and Expanding Understanding

Visualizing the Integer Line

The number line is a classic way to see integers: …, –2, –1, 0, 1, 2, … sprawled along an infinite stretch. This image helps us intuit concepts like ordering (−5 comes before −2), distance (|3 − (−1)| = 4), and symmetry (positive vs. negative).

Conceptual Utility in Problem-Solving

In puzzles or logic problems, integers give clarity—you can often test small integer cases to get a sense of a bigger problem. That’s common in programming contests or math proofs. On the other hand, real-world scenarios that require fractional inputs (like pounds of material) push us into rational or real numbers—but integers still form the backbone. Think of cutting materials in whole units versus splitting them—integer constraints shape many practical decisions.
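Here is what a small-case experiment can look like, using a hypothetical example question: is the sum of the first n odd numbers always a perfect square? Checking n = 1 to 10 in Python suggests the pattern (1, 4, 9, …), which a proof by induction would then confirm. The loop alone proves nothing beyond the cases it checks.

```python
from math import isqrt


def sum_first_odds(n: int) -> int:
    """Sum 1 + 3 + 5 + ... over the first n odd numbers."""
    return sum(2 * k + 1 for k in range(n))


# Test small cases and report whether each total is a perfect square.
for n in range(1, 11):
    total = sum_first_odds(n)
    is_square = isqrt(total) ** 2 == total
    print(n, total, is_square)
```

The printed totals also hint at the exact formula (the sum equals n²), which is the kind of conjecture small cases are good at surfacing.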

The Human Angle: Why We Stick with Whole Numbers

People often prefer integers because they're simpler to grasp and communicate. “I have 3 apples” feels more intuitive than “I have 3.0 or 2.75 apples.” In surveys, ratings, and counts, whole numbers are the default. That's partly why integers hold so much informal authority in daily life, education, and statistical reporting.

Wrapping Up: Understanding Integers Holistically

Integers might seem mundane, but they’re far from trivial. They’re the go-to in many practical and theoretical contexts—from basic counting to cryptography. They hold firm, consistent rules and powerful properties that drive computation, logic, and more. Plus, they carry a sort of intuitive human comfort: with integers, there’s rarely ambiguity.

Next time you see a negative score, a population count, or tick off items on a list, recognize the integer doing its silent work behind the scenes.

Conclusion

Exploring integers reveals that they’re more than just numbers without decimals—they’re the backbone of discrete thinking, everyday reasoning, and secure systems. From counting apples to securing online transactions, integers pack both simplicity and deep utility. Remember, these whole numbers silently knit together logic, code, and real-world interaction in ways we often overlook.

FAQs

What exactly qualifies as an integer?

An integer is any whole number without fractional or decimal parts—including negatives (–3, –1), zero, and positives (1, 2, 3). It excludes fractions or decimals like 2.5 or 1/3.

How are integers used in computing?

Integers are a primary data type in programming, compact in memory and fast to process. They support precise operations like indexing arrays, looping, and hashing, and their arithmetic is exact within the type's range (fixed-width integers can overflow), unlike floating-point numbers, which round.

What’s the difference between integers and whole numbers?

Whole numbers typically refer to nonnegative integers: 0, 1, 2, and so on. Integers include those plus negative whole numbers, making the set broader and covering more scenarios.

Why is the modulo operation important?

Modulo calculates the remainder of integer division, useful in cycling patterns like days of the week, distributing tasks evenly, or encryption routines. It’s especially key in computing and number theory.

Can you divide one integer by another and still get an integer?

Only when the divisor evenly divides the dividend. For example, 10 ÷ 2 = 5 yields an integer, but 7 ÷ 3 = 2.333… (not an integer). In computing, integer division usually drops the remainder and gives the quotient; languages differ on negative operands (C truncates toward zero, while Python rounds down).
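In Python, for example, `%` tests for an exact divisor and `//` performs integer division. Because `//` rounds down (toward negative infinity) while C truncates toward zero, the two disagree on negative operands. The `divides_evenly` and `trunc_div` helpers below are hypothetical sketches, the latter mimicking C-style division using only Python integers.

```python
def divides_evenly(dividend: int, divisor: int) -> bool:
    """True when divisor (nonzero) divides dividend with no remainder."""
    return dividend % divisor == 0


def trunc_div(a: int, b: int) -> int:
    """Hypothetical helper: integer division truncating toward zero, as C does."""
    q = abs(a) // abs(b)
    # The result is negative exactly when the operands have opposite signs.
    return q if (a >= 0) == (b > 0) else -q


print(divides_evenly(10, 2), 10 // 2)  # True 5
print(divides_evenly(7, 3), 7 // 3)    # False 2: the remainder is dropped
print(-7 // 2)                         # -4: Python rounds down
print(trunc_div(-7, 2))                # -3: truncation toward zero
```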

Are integers used in advanced mathematics?

Absolutely. They’re central to number theory—areas like prime factorization, divisibility, and Diophantine equations. Cryptography, algorithm design, and proofs often rely on integer-based logic rather than continuous values.

This human-penned, slightly conversational exploration shows that integers are more than a schoolbook concept—they’re a fundamental pattern we continually rely on, whether we notice or not.

February 8, 2026
